ETL is one of the most important and time-consuming components of data warehousing. BiG EVAL is a complete suite of software tools for leveraging the value of enterprise data by continuously validating and monitoring its quality. It automates testing tasks throughout the ETL process and provides quality statistics in production.

It can connect to more than 100 types of data sources, which you can manage through a single platform, in the cloud or on-premises. Data warehouses appeared in the 1980s and provided integrated access to data from many disparate systems. The problem, however, was that many data warehouses required vendor-specific ETL tools, so organizations ended up using different ETL tools with different data warehouses.

At Cognodata we have 20 years of experience in data management and analysis. We analyse and design strategies using machine learning and artificial intelligence. Loading is the final phase of the process: the transformed data is loaded into the target system so that every area of the organisation can be fed with information.

Essential ETL Automation Tester Skills

ETL tools enable businesses to extract data from various sources, clean it, and load it into a new destination efficiently and with relative ease. In addition, these tools often include features that help handle errors and ensure that data is accurate and consistent. The ETL process is a method used to integrate, clean, and prepare data from multiple sources so that it is easily accessible and usable for further analysis.
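
To make the extract, clean, and load steps concrete, here is a minimal sketch in Python using only the standard library. The CSV contents, the column names (`customer_id`, `email`, `amount`), and the `sales` table are hypothetical; an in-memory SQLite database stands in for the destination, and rows that fail validation are collected as rejects instead of aborting the run, which is one simple way to handle errors.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a source system (assumed columns).
RAW_CSV = """customer_id,email,amount
1, Alice@Example.com ,19.99
2,,5.00
3,bob@example.com,not-a-number
4,carol@example.com,42
"""


def extract(text):
    """Extract: read rows from a CSV source into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: clean and validate rows, collecting rejects instead of failing."""
    clean, rejects = [], []
    for row in rows:
        email = row["email"].strip().lower()
        try:
            amount = float(row["amount"])
        except ValueError:
            rejects.append((row, "amount is not numeric"))
            continue
        if not email:
            rejects.append((row, "missing email"))
            continue
        clean.append((int(row["customer_id"]), email, amount))
    return clean, rejects


def load(conn, rows):
    """Load: write the cleaned rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales (customer_id INTEGER, email TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
clean, rejects = transform(extract(RAW_CSV))
load(conn, clean)
print(conn.execute("SELECT COUNT(*) FROM sales").fetchone()[0])  # 2
print([reason for _, reason in rejects])
```

Keeping the three steps as separate functions makes each one testable on its own, and the reject list gives a place to report data-quality problems back to the source owners.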

You can reduce the time it takes to gain insights from months to weeks. The ETL process as a whole brings structure to your company's information. This lets you spend more time analyzing new questions and gaining new insights, rather than running manual procedures to obtain usable data at each stage. For example, you can calculate customers' lifetime value when the data sets are imported, or the number of their consecutive purchases. Every API is designed differently, whether you are using applications from giants like Facebook or from small software companies.
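
A sketch of enriching data at import time, assuming hypothetical order records of `(customer_id, order_date, amount)`. Lifetime value is taken here as the sum of a customer's order amounts, and "consecutive purchases" is interpreted, as an assumption, as the longest run of calendar months with at least one purchase; other definitions are equally valid.

```python
from collections import defaultdict
from datetime import date

# Hypothetical imported orders: (customer_id, order_date, amount).
orders = [
    ("c1", date(2023, 1, 5), 20.0),
    ("c1", date(2023, 2, 9), 35.0),
    ("c1", date(2023, 3, 1), 15.0),
    ("c1", date(2023, 6, 2), 30.0),
    ("c2", date(2023, 1, 20), 50.0),
]


def month_index(d):
    """Map a date to a running month number so adjacent months differ by exactly 1."""
    return d.year * 12 + d.month


def enrich(orders):
    """Per customer: lifetime value (sum of amounts) and longest run of consecutive purchase months."""
    totals = defaultdict(float)
    months = defaultdict(set)
    for customer, order_date, amount in orders:
        totals[customer] += amount
        months[customer].add(month_index(order_date))

    result = {}
    for customer, ms in months.items():
        longest = current = 0
        previous = None
        for m in sorted(ms):
            # Extend the streak if this month directly follows the previous one, else restart.
            current = current + 1 if previous is not None and m == previous + 1 else 1
            longest = max(longest, current)
            previous = m
        result[customer] = {"lifetime_value": totals[customer], "longest_streak": longest}
    return result


print(enrich(orders))
```

Computing these fields once during the transform step means downstream reports can read them directly instead of recalculating them from raw orders.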

ETL processes data in batches, while ELT can handle continuous streams of data. ELT excels at processing large data streams at scale, delivering real-time insights for dynamic decision-making. ELT tools support most on-premises and cloud data sources, with connectors for many software-as-a-service offerings. Their applications are also expanding beyond simply moving data: data migration for new systems, as well as data integrations, sorts, and joins, are becoming more common.
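
The main structural difference is where the transformation runs. This sketch, with hypothetical table and column names and SQLite standing in for the warehouse, computes the same per-customer totals twice: once transformed in the pipeline before loading (ETL), and once by loading raw rows into a staging table and transforming them with SQL inside the target (ELT). Both runs here are simple batches; the sketch does not model streaming.

```python
import sqlite3

# Hypothetical raw events: (customer, amount in cents stored as text).
RAW = [("alice", "1250"), ("bob", "300"), ("alice", "700")]


def etl(conn, rows):
    """ETL: transform (parse and aggregate) in the pipeline, then load only the result."""
    totals = {}
    for customer, cents in rows:
        totals[customer] = totals.get(customer, 0) + int(cents)
    conn.execute("CREATE TABLE etl_totals (customer TEXT, total_cents INTEGER)")
    conn.executemany("INSERT INTO etl_totals VALUES (?, ?)", sorted(totals.items()))


def elt(conn, rows):
    """ELT: load raw rows as-is into staging, then transform with SQL inside the target."""
    conn.execute("CREATE TABLE raw_events (customer TEXT, cents TEXT)")
    conn.executemany("INSERT INTO raw_events VALUES (?, ?)", rows)
    conn.execute(
        "CREATE TABLE elt_totals AS "
        "SELECT customer, SUM(CAST(cents AS INTEGER)) AS total_cents "
        "FROM raw_events GROUP BY customer"
    )


conn = sqlite3.connect(":memory:")
etl(conn, RAW)
elt(conn, RAW)
print(conn.execute("SELECT * FROM etl_totals ORDER BY customer").fetchall())
print(conn.execute("SELECT * FROM elt_totals ORDER BY customer").fetchall())
```

In the ELT variant the raw rows stay available in `raw_events`, so a new transformation can be run later without re-extracting from the source.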

DataOps Highlights the Demand for Automated ETL Testing (Part

These are just a few of the most important benefits of automating data integration. They are all compelling and show the value of the technology not only to the technical implementation team but also to the business community. Automating your ETL processes is the only way to achieve this. By capturing all the technical metadata and ensuring that it is accurate and current, automated ETL also serves another audience well: the data governance function. ETL automation supports the technical team as it moves to a more iterative and agile approach.
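
Automated ETL tests typically reconcile the target against the source after each run. The following sketch shows a few such checks (row counts, a column total, NULL keys, and source keys missing from the target). The table and column names are hypothetical, SQLite stands in for both systems, and this is not the API of any tool mentioned in this article.

```python
import sqlite3


def run_checks(conn, source, target, key, amount):
    """Reconcile a target table against its source; return descriptions of failed checks.

    Table and column names are interpolated into SQL, so they must be trusted identifiers.
    """

    def scalar(sql):
        return conn.execute(sql).fetchone()[0]

    failures = []
    src_count = scalar(f"SELECT COUNT(*) FROM {source}")
    tgt_count = scalar(f"SELECT COUNT(*) FROM {target}")
    if src_count != tgt_count:
        failures.append(f"row count: {src_count} in source vs {tgt_count} in target")

    src_sum = scalar(f"SELECT COALESCE(SUM({amount}), 0) FROM {source}")
    tgt_sum = scalar(f"SELECT COALESCE(SUM({amount}), 0) FROM {target}")
    if src_sum != tgt_sum:
        failures.append(f"sum({amount}): {src_sum} in source vs {tgt_sum} in target")

    nulls = scalar(f"SELECT COUNT(*) FROM {target} WHERE {key} IS NULL")
    if nulls:
        failures.append(f"{nulls} target rows have NULL {key}")

    missing = scalar(
        f"SELECT COUNT(*) FROM {source} s WHERE NOT EXISTS "
        f"(SELECT 1 FROM {target} t WHERE t.{key} = s.{key})"
    )
    if missing:
        failures.append(f"{missing} source keys missing from target")
    return failures


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE src_orders (id INTEGER, amount INTEGER)")
conn.execute("CREATE TABLE dwh_orders (id INTEGER, amount INTEGER)")
conn.executemany("INSERT INTO src_orders VALUES (?, ?)", [(1, 10), (2, 20), (3, 30)])
# Simulated faulty load: the third row lost its key, yet counts and totals still match.
conn.executemany("INSERT INTO dwh_orders VALUES (?, ?)", [(1, 10), (2, 20), (None, 30)])

failures = run_checks(conn, "src_orders", "dwh_orders", key="id", amount="amount")
for failure in failures:
    print("FAILED:", failure)
```

In the demo the row counts and totals agree even though the load lost a key, which is why reconciliation usually combines aggregate checks with key-level ones.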

- Not only that, you will also get consistent information across all these applications.

- Data replication copies changes in data sources, in real time or in batches, to a central database. Data replication is often listed as a data integration technique.

- See how ActiveBatch's workload automation helps ensure the highest security standards for data extraction and more.
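
One common way to replicate changes in batches is timestamp-based incremental extraction: each run copies only rows whose `updated_at` is newer than the last high-water mark. The sketch below assumes a hypothetical `customers` table and uses two in-memory SQLite databases as the source and the central database. Real-time replication usually relies on log-based change data capture instead, which this does not show.

```python
import sqlite3

SCHEMA = "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, updated_at INTEGER)"


def replicate_batch(source, central, watermark):
    """Copy rows changed since `watermark` from source to central; return the new watermark."""
    rows = source.execute(
        "SELECT id, name, updated_at FROM customers WHERE updated_at > ? ORDER BY updated_at",
        (watermark,),
    ).fetchall()
    # INSERT OR REPLACE overwrites the central copy when a row changed again at the source.
    central.executemany("INSERT OR REPLACE INTO customers VALUES (?, ?, ?)", rows)
    central.commit()
    # The newest timestamp seen becomes the starting point for the next batch.
    return rows[-1][2] if rows else watermark


source = sqlite3.connect(":memory:")
central = sqlite3.connect(":memory:")
source.execute(SCHEMA)
central.execute(SCHEMA)

source.executemany("INSERT INTO customers VALUES (?, ?, ?)", [(1, "Ann", 100), (2, "Ben", 101)])
watermark = replicate_batch(source, central, watermark=0)

# Later: one row is updated and one is inserted at the source.
source.execute("UPDATE customers SET name = 'Anna', updated_at = 102 WHERE id = 1")
source.execute("INSERT INTO customers VALUES (3, 'Cy', 103)")
watermark = replicate_batch(source, central, watermark)

print(watermark, central.execute("SELECT * FROM customers ORDER BY id").fetchall())
```

Because the filter is a strict `>`, a row written later with the same timestamp as the stored watermark would be skipped; setups that need stronger guarantees often use monotonic sequence numbers or a small overlap window instead.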

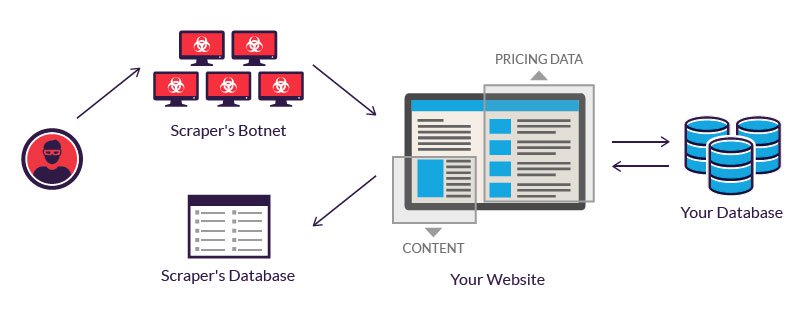

The challenges of ETL automation are often intertwined with the benefits covered above, so organizations should understand these difficulties when getting the most out of ETL automation. For instance, if two stores merge their businesses, they may have many suppliers, partners, and customers in common, and each may hold data about all those entities in its own repositories. However, the two parties may use different databases, and the data stored in those databases may not always agree.
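
When two organizations combine their data, a first step is usually to match shared entities and flag the fields that disagree. This sketch assumes hypothetical supplier records keyed only by company name, normalized by lowercasing, dropping punctuation, and removing a few legal suffixes. Real matching would prefer stable identifiers such as tax numbers and often needs fuzzy matching as well.

```python
LEGAL_SUFFIXES = {"inc", "ltd", "llc", "co"}  # assumption: a tiny illustrative list


def normalize(name):
    """Normalize a company name for matching: lowercase, strip punctuation and legal suffixes."""
    cleaned = "".join(ch for ch in name.lower() if ch.isalnum() or ch.isspace())
    return " ".join(w for w in cleaned.split() if w not in LEGAL_SUFFIXES)


def reconcile(store_a, store_b, fields):
    """Match records by normalized name; report conflicts and records found on one side only."""
    index_b = {normalize(r["name"]): r for r in store_b}
    matched, conflicts, only_a = 0, [], []
    for rec in store_a:
        other = index_b.pop(normalize(rec["name"]), None)
        if other is None:
            only_a.append(rec["name"])
            continue
        matched += 1
        for field in fields:
            if rec.get(field) != other.get(field):
                conflicts.append((rec["name"], field, rec.get(field), other.get(field)))
    only_b = [r["name"] for r in index_b.values()]
    return matched, conflicts, only_a, only_b


# Hypothetical supplier records held by each store.
store_a = [
    {"name": "Acme Inc.", "city": "Berlin", "phone": "555-0100"},
    {"name": "Globex Ltd", "city": "Paris", "phone": "555-0199"},
]
store_b = [
    {"name": "ACME", "city": "Munich", "phone": "555-0100"},
    {"name": "Initech LLC", "city": "Austin", "phone": "555-0142"},
]

print(reconcile(store_a, store_b, fields=["city", "phone"]))
```

Conflicts such as the two different cities for the same supplier cannot be resolved automatically by this code; they are surfaced so that a survivorship rule or a data steward can decide which value wins.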

See how teams use Redwood RunMyJobs to speed up ETL and ELT processes through automation, building processes in minutes with an extensive library of included integrations, templates, and wizards. This blog post discusses the 15 best ETL tools currently available on the market. Depending on your needs, you can use one of them to boost your productivity through a marked improvement in operational efficiency.

GDPR Data Mapping: How to Reduce Data Privacy Risks

For some variables, the values contain unnecessary text that needs to be removed. For instance, for the variables emp_length and term, cleanup is done by removing the extra text and converting the values to float. Dummy variables are created for categorical variables, e.g., purpose of the loan, home ownership, grade, sub-grade, verification status, state, and so on. If a variable has many categories, or two categories are similar, several dummies are combined into one based on similar default risk. The weight of evidence (WoE) of each variable's categories is examined to determine whether any grouping of categories is needed.
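
The cleaning and encoding steps described above can be sketched with the standard library alone. The raw value formats (`"10+ years"`, `"< 1 year"`, `" 36 months"`) are assumed Lending Club-style strings, mapping `"< 1 year"` to 0 is an assumption, and WoE is computed with the common convention ln(share of good loans / share of bad loans); some texts invert the ratio. The loan data is a hypothetical toy sample.

```python
import math
import re
from collections import Counter


def clean_numeric(text):
    """Strip text such as ' years' or ' months' and return a float, or None if no number."""
    if text is None:
        return None
    if text.strip().startswith("<"):  # assumption: "< 1 year" is treated as 0 years
        return 0.0
    match = re.search(r"\d+(?:\.\d+)?", text)
    return float(match.group()) if match else None


def dummies(values, prefix):
    """One-hot encode a categorical column: one 0/1 indicator per distinct category."""
    categories = sorted(set(values))
    return [{f"{prefix}_{c}": int(v == c) for c in categories} for v in values]


def weight_of_evidence(categories, defaults):
    """WoE per category = ln(share of all good loans / share of all bad loans).

    `defaults` holds 1 for a defaulted (bad) loan and 0 otherwise. WoE is undefined
    (None here) when a category has no good or no bad loans.
    """
    good = Counter(c for c, d in zip(categories, defaults) if d == 0)
    bad = Counter(c for c, d in zip(categories, defaults) if d == 1)
    total_good, total_bad = sum(good.values()), sum(bad.values())
    woe = {}
    for c in sorted(set(categories)):
        if good[c] == 0 or bad[c] == 0:
            woe[c] = None
        else:
            woe[c] = math.log((good[c] / total_good) / (bad[c] / total_bad))
    return woe


print([clean_numeric(v) for v in ["10+ years", "< 1 year", "3 years", "n/a"]])
print(clean_numeric(" 36 months"))

home = ["RENT", "RENT", "RENT", "RENT", "OWN", "OWN", "MORTGAGE", "MORTGAGE"]
defaulted = [1, 1, 0, 0, 0, 0, 1, 0]
print(dummies(home, "home")[0])
print(weight_of_evidence(home, defaulted))
```

In this toy data RENT and MORTGAGE get the same WoE, so they would be candidates for merging into a single dummy, while OWN has no defaults and its WoE is undefined without some form of smoothing.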